Introduction

AI-generated content is text created by, or with the assistance of, artificial intelligence systems trained on large datasets. Its rapid adoption has changed how content is planned, produced, and scaled, raising questions about quality, trust, and long-term impact.

While AI can accelerate parts of the content process, it does not replace the judgment, experience, or accountability required for credible publishing. Used without standards, it can introduce errors, flatten originality, and erode trust.

This article explains what AI-generated content is, where it adds value, where it falls short, and how to use it responsibly within a content marketing system.

Key Takeaways

- AI-generated content is a production tool, not a content strategy.

- Human judgment is essential for quality, accuracy, and trust.

- AI works best when supporting planning, drafting, and updating tasks.

- Risks increase when AI is used to replace oversight rather than assist it.

- Clear standards and governance determine whether AI content helps or harms credibility.

Disclaimer: I am an independent Affiliate. The opinions expressed here are my own and are not official statements. If you follow a link and make a purchase, I may earn a commission.

What AI-generated content actually is

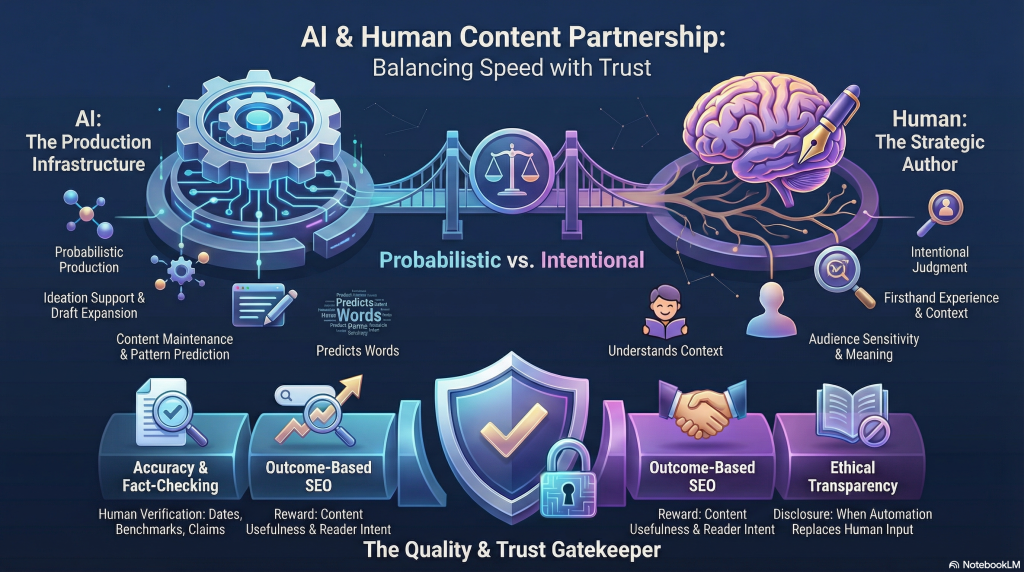

AI-generated content is created by language models that predict text based on patterns learned from large collections of existing material. These systems do not understand topics, verify facts, or form opinions. They generate responses by estimating what words are likely to come next given a prompt.

This distinction matters because AI output is probabilistic, not intentional. It can sound confident while being incomplete or incorrect, and it does not distinguish between strong sources and weak ones unless guided carefully. The quality of the result depends heavily on the prompt and constraints the model is given and on the human review that follows.
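The idea of estimating which word is likely to come next can be illustrated with a toy example. The sketch below is a deliberately simplified stand-in: it counts word pairs in a tiny, invented training text, whereas real language models use neural networks over much larger data. The function names and the sample text are assumptions made for illustration only.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    follower_counts = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        follower_counts[current_word][next_word] += 1
    return follower_counts


def next_word_probabilities(model, word):
    """Turn raw follower counts into probabilities for the next word."""
    counts = model.get(word.lower(), Counter())
    total = sum(counts.values())
    if total == 0:
        # The model has never seen this word, so it has nothing to predict.
        return {}
    return {candidate: count / total for candidate, count in counts.items()}


# Hypothetical training text, invented for this illustration.
corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigram_model(corpus)
probs = next_word_probabilities(model, "the")
print(probs)
```

In this toy model, "cat" is the most likely word after "the" only because it appeared most often in the training text. Nothing in the code checks whether a statement about cats would be true, which is the sense in which the output is probabilistic rather than intentional.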

AI-generated content is also not inherently original in the human sense. While it can recombine ideas in useful ways, it does not produce firsthand experience, independent judgment, or new insight. Those elements still come from people.

Bottom line: AI generates language efficiently, but meaning, accuracy, and responsibility remain human tasks.

Where AI-generated content works well

AI-generated content is most effective when it supports existing workflows rather than trying to replace them. Used in clearly defined roles, it can reduce friction and speed up parts of the content process without compromising quality.

One strong use case is ideation and outlining. AI can help generate topic variations, organize rough structures, or surface angles that might otherwise be missed. This is especially useful at the planning stage, where speed and breadth matter more than precision.

AI also works well as draft support. It can expand bullet points into rough prose, suggest transitions, or help rephrase sections for clarity. In this role, the output is treated as a starting point, not a finished product, and is always reviewed and edited by a human.

Another effective application is updating and summarizing existing content. AI can assist in condensing long sections, adapting content for different formats, or identifying areas that may need refreshing. This can make maintenance more efficient without altering the underlying substance.

Bottom line: AI adds the most value when it accelerates thinking and execution, while humans retain control over meaning, accuracy, and judgment.

Where AI-generated content breaks down

AI-generated content struggles when tasks require judgment, originality, or accountability. These limits are not edge cases; they are central to credible content marketing.

One major weakness is original insight and experience. AI cannot draw from firsthand use, lived experience, or situational judgment. It can describe concepts, but it cannot evaluate them in context or explain why something worked in a specific situation. Content that depends on perspective or nuance suffers most here.

Accuracy is another risk. AI systems can produce statements that sound plausible but are factually incorrect or outdated. Because the language is fluent, errors are harder to spot without careful review. This makes unsupervised publishing especially risky in topics where precision matters.

Tone and judgment also break down. AI can imitate styles, but it does not understand audience sensitivity, ethical boundaries, or reputational risk. Without guidance, it may overstate claims, miss important caveats, or flatten complex ideas into generic advice.

Bottom line: AI is weakest where credibility depends on experience, accuracy, and judgment, which are the areas that matter most for trust.

AI-generated content and quality standards

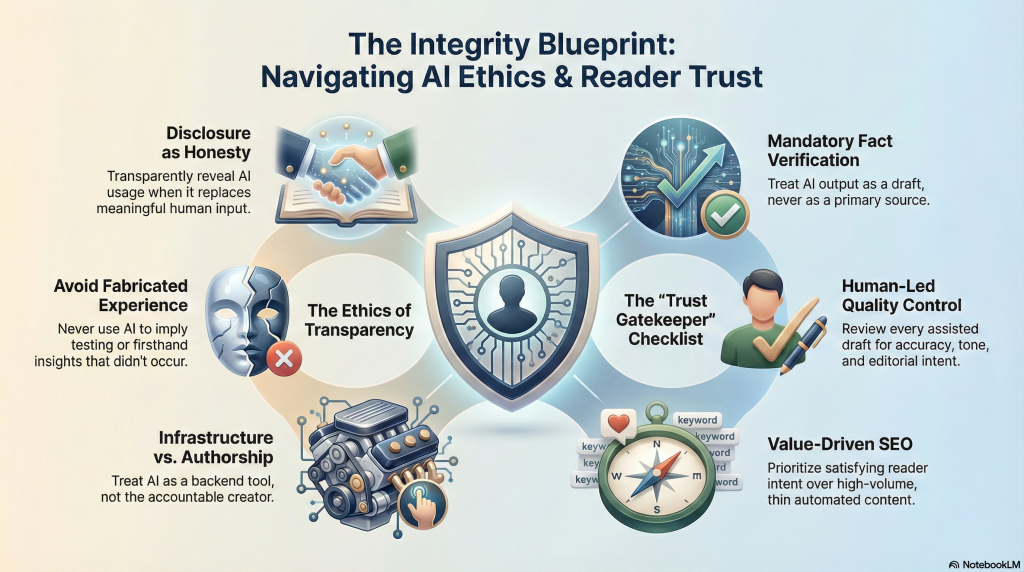

Quality standards determine whether AI-generated content is useful or harmful. Without clear expectations and review processes, AI output tends to default to what sounds right rather than what is right.

Human review is non-negotiable. Every AI-assisted draft should be checked for accuracy, completeness, and alignment with editorial intent. This includes verifying facts, validating examples, and ensuring claims are appropriately qualified. AI can assist with language, but it cannot be responsible for truth.

Consistency with editorial voice is another standard that requires human control. AI-generated text often drifts toward generic phrasing or inconsistent tone. Editing for clarity, structure, and voice ensures the content remains recognizable and trustworthy across articles.

Finally, sourcing and attribution matter. AI output should never be treated as a source. When factual claims or benchmarks are included, they must be supported by reliable references and, where relevant, dates. This reinforces accountability and protects long-term credibility.

Bottom line: quality in AI-generated content comes from standards and oversight, not from the tool itself.

SEO, trust, and AI-generated content

AI-generated content does not automatically help or harm SEO. Its impact depends on whether the content is genuinely useful, accurate, and aligned with reader intent. Search engines evaluate outcomes, not tools, which means quality signals still apply regardless of how content is produced.

Problems arise when AI is used to scale content without substance. Pages that repeat existing information, lack depth, or fail to resolve user questions tend to perform poorly over time. Automation at scale often amplifies thin coverage rather than authority.

Trust is the more fragile factor. Readers are quick to disengage when content feels generic, vague, or disconnected from real experience. Even when rankings hold temporarily, trust erosion reduces long-term engagement, return visits, and brand credibility.

Used carefully, AI can support SEO by improving structure, clarity, and coverage. Used carelessly, it can undermine both visibility and trust at the same time.

Bottom line: AI does not change what search engines or readers value. It only changes how easily poor content can be produced.

Ethics, disclosure, and reader trust

Ethical use of AI-generated content is about respecting the reader’s expectations. When people engage with content, they assume it has been created with care, judgment, and accountability. AI does not remove that responsibility from the publisher.

Disclosure becomes relevant when AI materially affects how content is produced or perceived. While not every use of AI requires explicit disclosure, transparency is important when automation replaces meaningful human input or when readers could reasonably assume otherwise. The goal is not to overexplain tools, but to avoid deception.

Avoiding harm is another ethical boundary. AI-generated content should not be used to fabricate experience, imply testing that did not occur, or present speculative information as fact. These practices damage trust quickly, and that damage is difficult to repair once they are exposed.

Ultimately, trust is cumulative. Readers may not care whether AI assisted in drafting, but they do care whether the content is accurate, honest, and respectful of their time. Ethical standards ensure that AI supports those outcomes rather than undermining them.

Bottom line: ethical AI use protects the reader relationship, which is more valuable than any short-term efficiency gain.

Using AI responsibly in content marketing

Responsible use of AI in content marketing starts with clear role definition. AI should assist specific tasks within the workflow, not replace the thinking, judgment, or accountability required to publish credible content. When roles are vague, quality and trust tend to erode.

The most effective approach is to treat AI as an assistant. It can help with research organization, outlining, drafting support, and content maintenance, while humans remain responsible for strategy, accuracy, and final decisions. This keeps ownership of meaning and quality where it belongs.

Editorial controls are essential. Clear guidelines for when AI may be used, how outputs are reviewed, and who approves final content prevent inconsistency and misuse. These controls also make it easier to scale content production without sacrificing standards.
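For teams that track drafts in software, the controls described above could be expressed as a simple pre-publish check. The following is a minimal sketch, not a recommended system: the `Draft` fields and the `publishing_blockers` function are hypothetical names chosen for this example, and the rules only mirror the ones named in this article (human fact-checking, editing for voice, and a named approver).

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Draft:
    """Hypothetical record of a draft's review status."""
    title: str
    ai_assisted: bool
    fact_checked: bool = False
    edited_for_voice: bool = False
    approved_by: Optional[str] = None


def publishing_blockers(draft: Draft) -> List[str]:
    """Return the reasons a draft is not yet ready to publish."""
    blockers = []
    if draft.ai_assisted and not draft.fact_checked:
        blockers.append("facts not verified by a human reviewer")
    if draft.ai_assisted and not draft.edited_for_voice:
        blockers.append("not edited for clarity and voice")
    if draft.approved_by is None:
        # Every draft needs a named person accountable for the final decision.
        blockers.append("no named approver")
    return blockers


draft = Draft(title="Example post", ai_assisted=True)
for reason in publishing_blockers(draft):
    print("Blocked:", reason)
```

The check returns reasons rather than a simple yes or no so that the person responsible can see what review step is still missing. The judgment itself still happens outside the code.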

Finally, restraint matters. Not every task needs automation, and not every efficiency gain is worth the trade-off. Responsible use prioritizes long-term trust and clarity over short-term speed.

Bottom line: AI supports content marketing best when it is governed, reviewed, and kept in a clearly defined supporting role.

Conclusion

AI-generated content has changed how content is produced, but it has not changed what makes content valuable. Clarity, accuracy, judgment, and trust remain the deciding factors, regardless of the tools involved. AI can accelerate parts of the process, but it cannot take responsibility for outcomes.

Used thoughtfully, AI supports planning, drafting, and maintenance while freeing human effort for strategy and insight. Used carelessly, it amplifies errors, flattens originality, and weakens credibility at scale. The difference lies in standards, oversight, and restraint.

For content marketing to remain effective and trustworthy, AI must be treated as infrastructure, not authorship. When humans stay accountable for meaning and quality, AI becomes a useful ally rather than a liability.

Frequently Asked Questions

Is AI-generated content bad for SEO?

No. AI-generated content is evaluated the same way as any other content. Performance depends on usefulness, accuracy, and how well the content satisfies reader intent.

Should AI-generated content be disclosed?

Disclosure is appropriate when AI meaningfully replaces human input or could affect reader expectations. Routine assistance, such as drafting support or outlining, does not always require disclosure.

Can AI replace human writers?

No. AI can generate language, but it cannot provide judgment, experience, or accountability. Human oversight remains essential for credible content.

How should AI-generated content be reviewed?

All AI-assisted content should be fact-checked, edited for clarity and tone, and reviewed against editorial standards before publishing.

What are the biggest risks of using AI for content?

The main risks are inaccuracies, generic output, and erosion of trust when automation is used without clear governance or review.
