Introduction

Large language models often produce correct answers without explaining how they arrived there. That can feel impressive, but it also creates problems. When reasoning stays hidden, it is harder to trust results, debug mistakes, or guide the model through complex tasks.

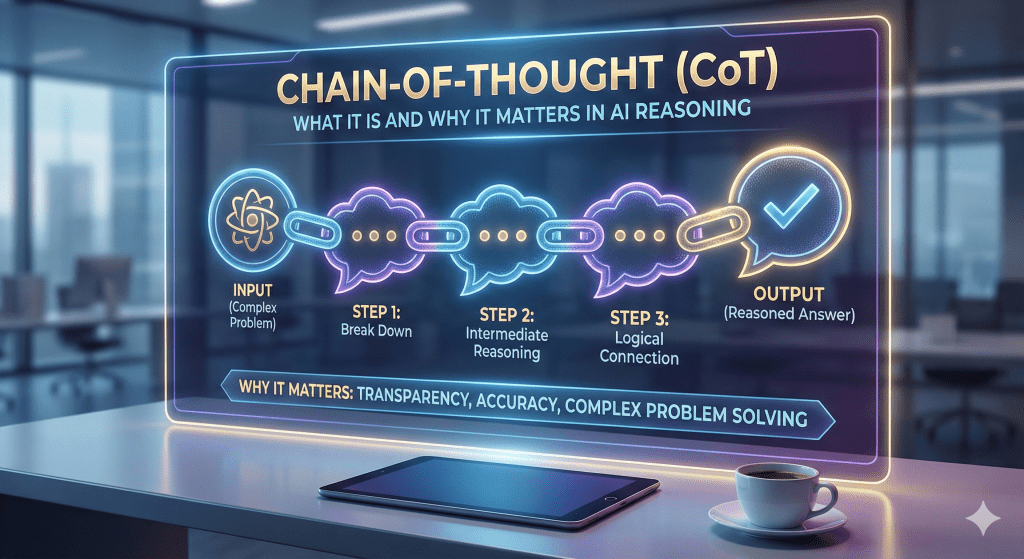

Chain-of-Thought (CoT) emerged as a way to address this gap. Instead of jumping straight to an answer, the model is encouraged to reason step by step. This approach improves performance on tasks that require logic, sequencing, or multi-step thinking.

This guide explains what Chain-of-Thought is, why it matters, and how it fits into modern AI prompting without turning reasoning into noise.

Key Takeaways

- Chain-of-Thought (CoT) is a reasoning approach, not a tool or feature.

- It encourages step-by-step thinking to improve accuracy on complex tasks.

- CoT works best for logic, math, and multi-stage reasoning problems.

- It is not a full prompt framework and does not replace structure.

- Modern best practice uses CoT internally without exposing raw reasoning.

Disclaimer: I am an independent Affiliate. The opinions expressed here are my own and are not official statements. If you follow a link and make a purchase, I may earn a commission.

What Chain-of-Thought (CoT) Actually Is

Chain-of-Thought (CoT) is a reasoning approach that encourages an AI model to work through a problem step by step before producing a final answer.

Instead of treating a task as a single jump from question to output, CoT frames the task as a sequence of intermediate steps. Each step builds toward the conclusion. This makes complex reasoning more reliable, especially when the answer depends on multiple conditions or calculations.

It is important to be precise here. CoT is not a setting you turn on. It is not a prompt template. And it is not a guarantee of correctness. It is a way of guiding how the model processes information internally.

At a conceptual level, CoT shifts the model from pattern matching alone to structured reasoning. When the model is prompted to think through a problem in stages, it is less likely to skip assumptions or collapse multiple decisions into one opaque leap.

That said, CoT does not define what the task is, how the response should be formatted, or what constraints apply. It only affects how the model reasons. Everything else still needs to be defined elsewhere in the prompt.

This distinction matters. CoT improves reasoning quality, but it does not replace clarity, intent, or structure. It works best when it supports those elements, not when it is treated as the entire solution.
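
To make the distinction concrete, here is a minimal sketch of the same question phrased two ways. The function names and the exact wording of the instructions are illustrative assumptions, not a standard template. The only difference is that the second prompt asks for intermediate steps before the answer.

```python
def direct_prompt(question: str) -> str:
    """Ask for the answer only, with no request for intermediate steps."""
    return f"Question: {question}\nAnswer:"


def cot_prompt(question: str) -> str:
    """Ask the model to reason in steps before committing to an answer.

    The instruction wording is an assumption made for this example.
    """
    return (
        f"Question: {question}\n"
        "Work through the problem step by step, "
        "then give the final answer on its own line.\n"
        "Reasoning:"
    )


question = "A train leaves at 14:10 and arrives at 16:45. How long is the journey?"
print(direct_prompt(question))
print()
print(cot_prompt(question))
```

Note that neither prompt defines a role, an output format, or constraints. Those still have to come from the rest of the prompt.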

Why Chain-of-Thought Emerged in AI Research

Chain-of-Thought did not appear because models lacked knowledge. It emerged because models struggled with reasoning depth on certain types of problems.

Early language models were strong at pattern recognition. They could generate fluent answers, but they often failed on tasks that required multiple logical steps. Math problems, symbolic reasoning, and conditional logic exposed this weakness.

Researchers noticed a pattern. When models were encouraged to articulate intermediate steps, accuracy on these multi-step tasks improved.

Chain-of-Thought formalized that observation.

Instead of treating reasoning as an invisible process, CoT made reasoning explicit during generation. This helped models stay aligned with the logic of the task, especially when the solution depended on order or cumulative decisions.

Another reason CoT emerged was evaluation. When reasoning steps were visible, researchers could inspect where errors occurred. This made it easier to understand failure modes and improve training methods.

Over time, CoT became a recognized concept in AI research. Not because it solved all reasoning problems, but because it reliably improved performance in specific domains.

That context matters. CoT was created to address a narrow but important limitation. It was never meant to be a universal prompting strategy.

How Chain-of-Thought Reasoning Works at a High Level

At a high level, Chain-of-Thought reasoning works by encouraging the model to move through a problem in ordered steps instead of producing an answer in one leap.

The model still receives the same question. What changes is that it produces intermediate reasoning steps during generation before committing to an answer.

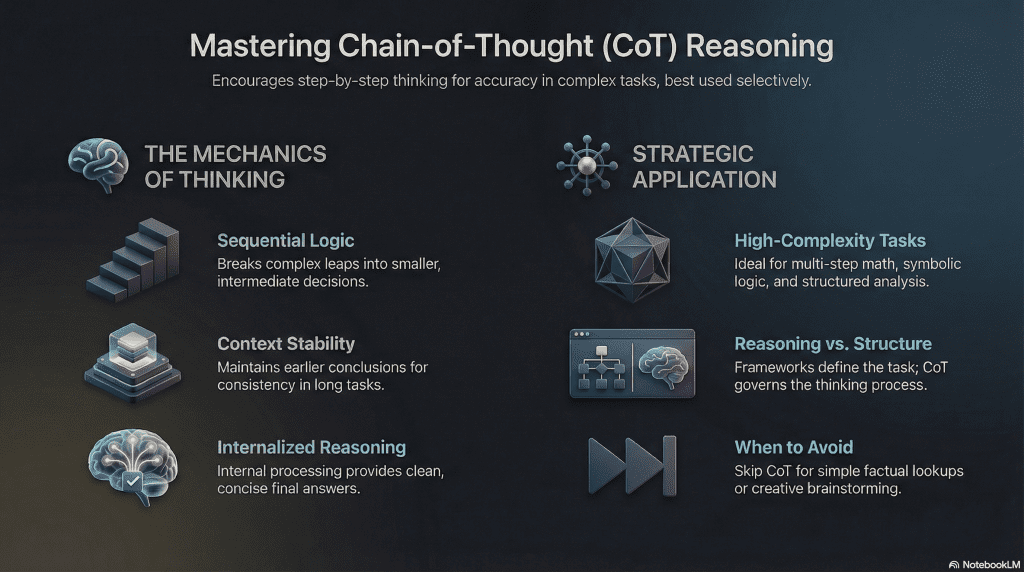

Breaking a Single Jump into Smaller Decisions

Without CoT, the model often compresses reasoning. It recognizes a pattern and outputs a result quickly. This can work for simple questions, but it breaks down when the task requires multiple dependent decisions.

Chain-of-Thought slows that process down.

By reasoning through intermediate steps, the model evaluates each decision before moving on. This reduces skipped assumptions and logical gaps, especially in tasks where one mistake compounds into a wrong answer.
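
The idea of dependent steps can be illustrated with a small worked example. This sketch does not call a model. It only writes out, in ordinary code, the kind of intermediate decisions a step-by-step answer would contain for a simple word problem. The problem and the function name are made up for illustration, and the sketch assumes the number of pens divides evenly into packs.

```python
def solve_change_problem(pens, pack_size, pack_price, paid):
    """Solve a small word problem, recording each dependent step.

    Assumes `pens` is an exact multiple of `pack_size`.
    """
    steps = []

    packs = pens // pack_size  # step 1: how many packs are needed
    steps.append(f"{pens} pens / {pack_size} per pack = {packs} packs")

    cost = packs * pack_price  # step 2 depends on the result of step 1
    steps.append(f"{packs} packs x ${pack_price} = ${cost}")

    change = paid - cost  # step 3 depends on the result of step 2
    steps.append(f"${paid} paid - ${cost} = ${change} change")

    return steps, change


steps, answer = solve_change_problem(pens=12, pack_size=3, pack_price=2, paid=10)
for number, step in enumerate(steps, start=1):
    print(f"Step {number}: {step}")
print(f"Final answer: ${answer}")
```

If step 1 were wrong, steps 2 and 3 would inherit the error. That is the compounding effect that reasoning in stages is meant to reduce.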

Maintaining Context Across Steps

Another benefit of CoT is context stability.

When reasoning is step-based, earlier conclusions are written out and stay in the model's context while it works through later ones. This makes it less likely to contradict itself or lose track of conditions introduced earlier in the problem.

This is why CoT performs well in multi-step math, logic puzzles, and structured analysis. Each step reinforces the next.

Producing a Final Answer After Reasoning

Importantly, the goal of CoT is not the reasoning itself. The goal is a better final answer.

In modern usage, the model may reason internally while only presenting a clean conclusion. The reasoning supports accuracy, but it does not need to be shown to the reader.

This distinction matters for publishing and practical use. CoT improves outcomes by guiding thought, not by exposing every step.

When Chain-of-Thought Improves AI Output

Chain-of-Thought is most effective when the task requires reasoning across multiple steps. It does not improve every prompt. Its value appears when the model must hold context, apply logic, or reach a conclusion through dependent decisions.

Multi-Step Logic and Math Problems

CoT consistently improves performance on problems where one step depends on the previous one.

Examples include math word problems, logical deductions, and symbolic reasoning. In these cases, jumping directly to an answer increases the risk of error. Step-by-step reasoning reduces that risk by forcing the model to validate each decision along the way.

Tasks That Require Structured Analysis

Analytical tasks benefit from CoT when they involve comparison, prioritization, or evaluation.

For example, breaking down pros and cons, identifying patterns in data, or evaluating trade-offs all require ordered thinking. CoT helps the model avoid shallow summaries by moving through each factor deliberately.

Problems With Hidden Assumptions

Some questions look simple but hide assumptions.

Chain-of-Thought helps surface those assumptions during reasoning. By articulating intermediate steps, the model is more likely to notice missing information or conditional dependencies that would otherwise be skipped.

Situations Where Accuracy Matters More Than Speed

CoT is useful when correctness is more important than fast output.

In educational settings, research analysis, and complex planning tasks, the additional reasoning depth improves reliability. For quick lookups or creative brainstorming, that tradeoff is often unnecessary.

Used correctly, Chain-of-Thought improves output quality by slowing down reasoning, not by adding verbosity. It shines when the task demands care, structure, and logical consistency.

Where Chain-of-Thought Breaks Down

Chain-of-Thought is useful, but it is not universally helpful. In some situations, it adds friction without improving results. Understanding these limits is just as important as knowing when to use it.

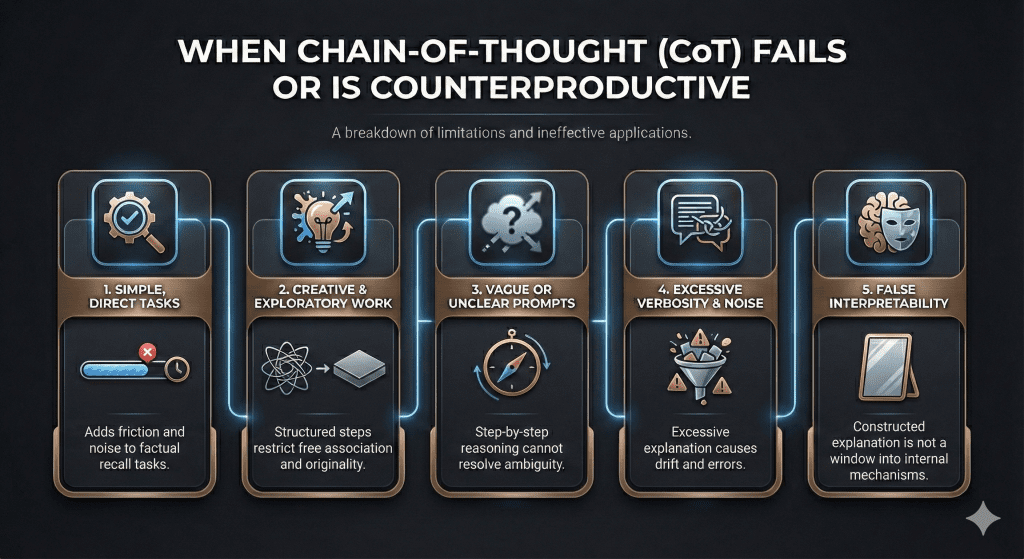

Simple or Direct Questions

For straightforward questions, CoT often adds no value.

If the task only requires recalling information or producing a short, factual answer, step-by-step reasoning can slow things down and clutter the response. The model already knows the answer. Asking it to reason through obvious steps does not improve accuracy.

In these cases, clarity of intent matters more than reasoning depth.

Creative and Exploratory Tasks

Creative work often benefits from looseness, not structure.

Brainstorming ideas, writing fiction, or generating marketing concepts usually improves when the model can move freely. Forcing explicit reasoning can flatten creativity and make outputs feel mechanical.

CoT is optimized for correctness, not originality.

Overly Long or Verbose Reasoning Chains

More reasoning is not always better.

When prompts encourage excessive step-by-step explanation, the model can lose focus. Long chains increase the risk of internal contradictions and unnecessary repetition. They can also distract from the final answer.

This is why modern best practice favors internal reasoning with concise outputs, rather than publishing full chains.

When Reasoning Becomes a Crutch

Another failure mode appears when CoT is used to compensate for unclear prompts.

If intent is vague or constraints are missing, step-by-step reasoning cannot fix the underlying problem. The model may reason carefully about the wrong task.

Chain-of-Thought supports clarity. It does not replace it.

In short, CoT breaks down when the task is simple, creative, or poorly defined. It works best as a precision tool, not a default setting.
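
The rule of thumb above can be written as a small decision helper. This is only a sketch of the article's guidance. The task categories and the function name are hypothetical, and real tasks will not always fit cleanly into one category.

```python
from enum import Enum


class TaskKind(Enum):
    """Hypothetical task categories, chosen to mirror the sections above."""
    LOOKUP = "lookup"          # simple or direct questions
    CREATIVE = "creative"      # brainstorming, fiction, marketing concepts
    MULTI_STEP = "multi_step"  # math, logic, dependent decisions
    ANALYSIS = "analysis"      # comparison, prioritization, trade-offs


def should_use_cot(kind: TaskKind, goal_is_clear: bool) -> bool:
    """Return True only when step-by-step reasoning is likely to help."""
    if not goal_is_clear:
        # A vague prompt should be fixed first; reasoning cannot repair it.
        return False
    return kind in {TaskKind.MULTI_STEP, TaskKind.ANALYSIS}


for kind in TaskKind:
    print(kind.value, should_use_cot(kind, goal_is_clear=True))
```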

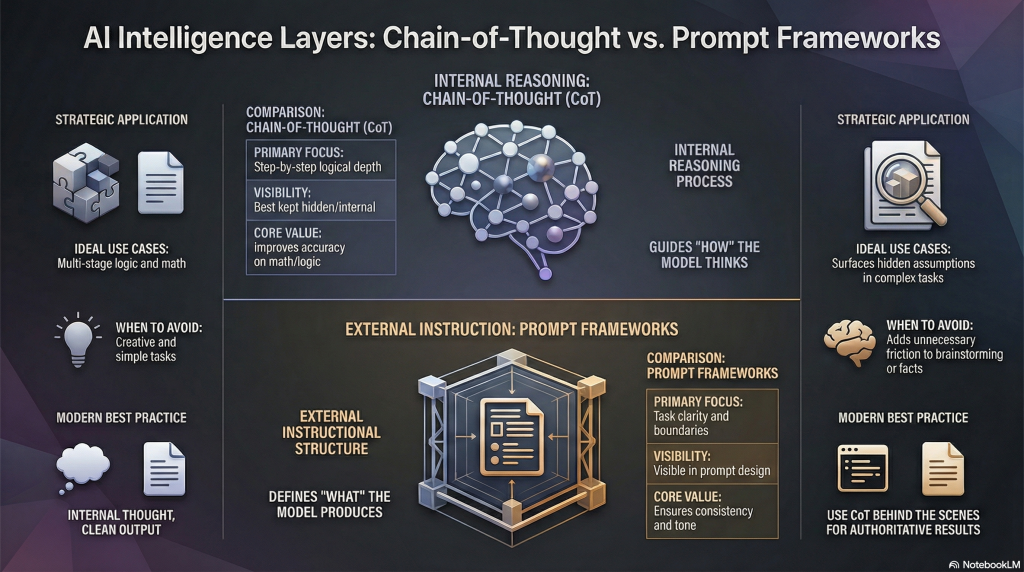

Chain-of-Thought vs Prompt Frameworks

Chain-of-Thought and prompt frameworks solve different problems, even though they are often discussed together. Confusing them leads to weak prompts and messy articles.

What Chain-of-Thought Focuses On

Chain-of-Thought focuses on how the model reasons.

It influences the internal thinking process by encouraging step-by-step logic. Its scope is narrow by design. It improves accuracy when a task depends on ordered reasoning, assumptions, or calculations.

CoT answers one question only: how should the model think through this problem?

It does not define goals, structure, tone, or boundaries.

What Prompt Frameworks Focus On

Prompt frameworks focus on how instructions are constructed.

A framework defines:

- What the task is.

- How the response should be shaped.

- What constraints apply.

- What success looks like.

Frameworks create consistency. They reduce ambiguity before reasoning even begins.

Where CoT improves thinking, frameworks improve communication.

Comparison of Prompting Strategies

To understand how these tools differ in practice, it helps to compare CoT against specific frameworks like RTF or CARE:

| Strategy | Primary Goal | Best For | Core Logic |
|---|---|---|---|
| Chain-of-Thought (CoT) | Mimic human reasoning steps. | Complex math, symbolic logic, and multi-step problems. | Breaks a problem into intermediate steps before the final answer. |
| RTF (Role-Task-Format) | Define professional context. | Professional emails, reports, or creative writing. | Assigns a persona, defines the job, and specifies the output style. |
| CARE (Context-Action-Result-Example) | Provide comprehensive depth. | Business strategy or detailed explanations. | Sets the stage and provides a clear goal with a concrete example. |
| Tree-of-Thoughts (ToT) | Explore multiple solutions. | Strategic planning and creative brainstorming. | Branches out into different ideas and “prunes” the ones that don’t work. |

Why They Are Not Competing Ideas

Chain-of-Thought and prompt frameworks are not alternatives. They operate at different layers.

A helpful way to think about it:

- Prompt frameworks decide what the model is being asked to do.

- Chain-of-Thought influences how the model reasons while doing it.

You can use a framework without CoT. You can use CoT without a framework. The strongest results come from using them together, intentionally.

How They Work Together in Practice

In practice, a framework sets the structure. CoT is applied selectively inside that structure when deeper reasoning is required.

This prevents two common mistakes:

- Using CoT to compensate for unclear intent.

- Treating CoT as a complete prompting solution.

When roles stay clear, outputs become both accurate and controlled.
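
As a rough sketch of how the two layers can combine, the example below builds a prompt from the RTF fields and adds a reasoning cue only when the task calls for it. The class name, field names, and cue wording are assumptions made for illustration. RTF itself does not prescribe any code representation.

```python
from dataclasses import dataclass


@dataclass
class RTFPrompt:
    """Hypothetical container for a Role-Task-Format prompt."""
    role: str
    task: str
    output_format: str
    needs_reasoning: bool = False  # opt in to a reasoning cue per task

    def render(self) -> str:
        # The framework layer: what is being asked and how to shape it.
        lines = [
            f"Role: {self.role}",
            f"Task: {self.task}",
            f"Format: {self.output_format}",
        ]
        # The reasoning layer: applied selectively, inside the structure.
        if self.needs_reasoning:
            lines.append(
                "Before answering, evaluate each option against the task, "
                "then state only your conclusion and a brief justification."
            )
        return "\n".join(lines)


prompt = RTFPrompt(
    role="Procurement analyst",
    task="Compare two vendors on price, support, and contract risk.",
    output_format="A short memo with one recommendation.",
    needs_reasoning=True,
)
print(prompt.render())
```

Keeping the cue behind a flag reflects the point above: the structure is always present, while deeper reasoning is requested only where it is needed.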

The Trade-offs

While combining them is powerful, there are trade-offs to consider:

Structure vs. Logic: Frameworks like RTF help the model match the tone and formatting you want. CoT reduces the risk of errors in its math or logic, though it does not eliminate them.

Speed vs. Accuracy: CoT and Tree-of-Thoughts take longer to process and use more tokens. That cost is often worth paying on hard tasks, but it is overkill for simple requests.
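
To get a feel for the token overhead, the sketch below compares a direct answer with a reasoned one. It uses a whitespace word count as a crude stand-in for tokens, which is an assumption. Real tokenizers split text differently, so the numbers are only indicative. The two sample responses are invented for the example.

```python
def rough_token_count(text: str) -> int:
    """Crude proxy for token usage: count whitespace-separated words.

    Real tokenizers behave differently; treat the result as an estimate.
    """
    return len(text.split())


# Two invented responses to the same question.
direct_answer = "Final answer: $2"
reasoned_answer = (
    "12 pens at 3 per pack is 4 packs. "
    "4 packs at $2 each cost $8. "
    "$10 paid minus $8 leaves $2. "
    "Final answer: $2"
)

direct_cost = rough_token_count(direct_answer)
reasoned_cost = rough_token_count(reasoned_answer)

print(f"direct: ~{direct_cost} tokens, with reasoning: ~{reasoned_cost} tokens")
print(f"overhead: {reasoned_cost / direct_cost:.1f}x")
```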

How CoT Is Used in Practice Without Exposing Reasoning

As Chain-of-Thought became more widely used, a key best practice emerged. The reasoning improves results, but the reasoning does not need to be shown.

This distinction is critical for real-world use, especially in published content, products, and user-facing tools.

Internal Reasoning, External Clarity

In practice, CoT often operates behind the scenes.

The model reasons through intermediate steps internally, but only presents the final answer or a short explanation. This keeps outputs clean, readable, and authoritative while still benefiting from deeper reasoning.

For example, instead of showing every logical step, the response might present:

- A concise conclusion

- A short justification

- A clear recommendation

The reasoning still happened. It just was not exposed.
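
One simple way to keep reasoning out of the displayed output is to ask the model to end with a marked final line and show only that part. The sketch below assumes the response uses a `Final answer:` marker. Both that convention and the function name are assumptions, not a feature of any particular model or API.

```python
def extract_final_answer(response: str, marker: str = "Final answer:") -> str:
    """Return the text after the last marker.

    If the marker is absent, fall back to the whole response.
    """
    _, found, tail = response.rpartition(marker)
    return tail.strip() if found else response.strip()


raw_response = (
    "Step 1: 12 pens at 3 per pack is 4 packs.\n"
    "Step 2: 4 packs at $2 each cost $8.\n"
    "Final answer: The pens cost $8."
)

print(extract_final_answer(raw_response))
```

The steps are still generated, so they can be logged for debugging, but the reader only sees the conclusion.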

Why Exposing Full Chains Is Usually a Bad Idea

Publishing full chains of thought often creates more problems than it solves.

Long reasoning trails can:

- Overwhelm readers

- Reduce perceived confidence

- Introduce unnecessary complexity

- Expose errors that do not affect the final answer

For most audiences, clarity matters more than transparency at the reasoning level.

This is why modern guidance favors guided reasoning with concise outputs, not step-by-step transcripts.

Prompting for Better Results Without Showing Steps

In applied settings, CoT is often triggered indirectly.

Instead of asking the model to “think step by step,” prompts might:

- Ask for a structured answer

- Request a justified conclusion

- Require evaluation before recommendation

These cues encourage reasoning without forcing the model to narrate every step.
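
The three cue types listed above might look like this in practice. The cue wording and the helper name are illustrative assumptions. The point is only that none of them asks the model to narrate its steps.

```python
# Hypothetical cue texts, one per approach listed above.
INDIRECT_CUES = {
    "structured": "Organize the answer under short headings.",
    "justified": "State your conclusion, then justify it in two sentences.",
    "evaluate_first": (
        "Evaluate each option against the criteria before recommending one."
    ),
}


def with_cue(task: str, cue: str) -> str:
    """Append one indirect reasoning cue to a task description."""
    return f"{task}\n{INDIRECT_CUES[cue]}"


task = "Should a five-person team adopt a monorepo or separate repositories?"
for cue_name in INDIRECT_CUES:
    print(with_cue(task, cue_name))
    print()
```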

Why This Matters for Articles and Publishing

For articles, the same rule applies.

Readers want conclusions, not internal deliberation. CoT helps writers and models arrive at better answers, but the final content should feel smooth and intentional, not procedural.

When CoT is used correctly, the audience never notices it. They just experience clearer logic and stronger conclusions.

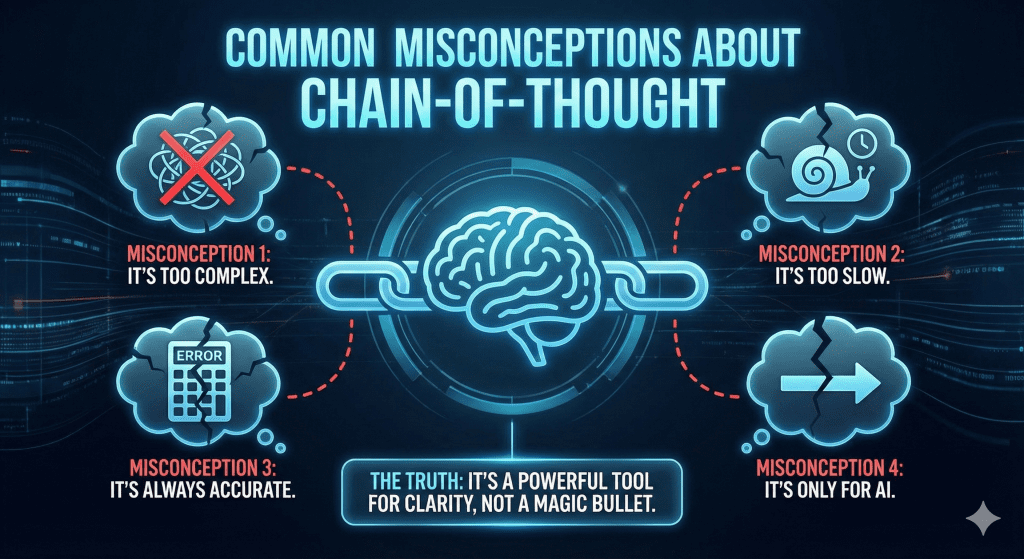

Common Misconceptions About Chain-of-Thought

Chain-of-Thought is widely discussed, but it is also widely misunderstood. These misconceptions often lead to misuse, poor results, or misplaced expectations.

“Chain-of-Thought Always Improves Output”

CoT does not improve every response.

It helps when a task requires reasoning across steps. It does very little for simple questions, factual lookups, or creative brainstorming. In those cases, it can slow the model down without improving accuracy.

Using CoT by default is a mistake. It is a precision tool, not a universal upgrade.

“More Reasoning Steps Mean Better Answers”

Longer chains are not better chains.

Excessive step-by-step reasoning can introduce noise, contradictions, and distraction. The goal is correct reasoning, not verbose reasoning. Once the model has enough structure to stay on track, additional steps often yield diminishing returns.

Good CoT is efficient, not exhaustive.

“Chain-of-Thought Explains the Model’s True Thinking”

This is a critical misunderstanding.

The reasoning a model produces is a generated explanation, not a transparent window into how it truly arrived at an answer. CoT improves performance, but it does not reveal the model’s internal mechanisms in a human sense.

Treat CoT as a tool for guiding output quality, not as an interpretability guarantee.

“Chain-of-Thought Replaces Prompt Design”

CoT cannot fix unclear prompts.

If the task is vague, the goal is undefined, or constraints are missing, step-by-step reasoning only helps the model reason carefully about the wrong thing. Clear intent and structure must come first.

CoT supports good prompting. It does not replace it.

“Chain-of-Thought Should Always Be Shown”

Exposing full reasoning chains is rarely helpful for end users.

Most readers want conclusions, not deliberation. Showing every step can reduce confidence and readability without improving understanding. In most cases, reasoning should inform the answer, not dominate it.

Understanding what Chain-of-Thought is not makes it far easier to use correctly. When expectations are realistic, CoT becomes a powerful addition instead of a source of confusion.

Conclusion

Chain-of-Thought (CoT) is best understood as a reasoning aid, not a complete prompting solution. It helps AI models slow down, evaluate intermediate steps, and arrive at more reliable answers when tasks require logic, sequencing, or layered decisions.

Its value comes from when and how it is applied. CoT improves outcomes for complex reasoning tasks, but it adds little to simple questions and can even get in the way of creative or exploratory work. It does not define intent, structure responses, or set boundaries. Those responsibilities still belong to prompt design and higher-level frameworks.

For articles, products, and user-facing content, the key takeaway is restraint. Chain-of-Thought should strengthen conclusions, not dominate them. When used correctly, readers never see the reasoning process. They only experience clearer logic, stronger answers, and fewer errors.

In short, Chain-of-Thought is a powerful supporting concept. It works best as part of a larger system, applied deliberately, and kept mostly behind the scenes.

Frequently Asked Questions

Is Chain-of-Thought the same as asking the AI to “show its work”?

Not exactly. Asking to “show its work” is one way to trigger Chain-of-Thought, but CoT itself is broader. It refers to encouraging step-by-step reasoning during generation. In many modern uses, that reasoning happens internally and is not shown verbatim.

Do I need Chain-of-Thought for every AI task?

No. CoT is most useful for tasks that require logic, sequencing, or multi-step decisions. For simple questions, summaries, or creative brainstorming, it often adds no value and can slow things down.

Is Chain-of-Thought a prompt framework?

No. Chain-of-Thought is a reasoning technique. It influences how the model thinks through a problem, but it does not define intent, response structure, or constraints. Those elements belong to prompt frameworks.

Can Chain-of-Thought improve accuracy?

Yes, in the right contexts. CoT has been shown to improve accuracy on math problems, logical reasoning tasks, and structured analysis where intermediate steps matter.

Should articles or products expose full chains of thought?

Usually not. Full reasoning chains can overwhelm readers and reduce clarity. Best practice is to let CoT improve the answer behind the scenes, then present a clear, confident conclusion.
